Deep learning has enabled the decoding of language from intracranial brain recordings, but achieving this with non-invasive recordings remains an open challenge.

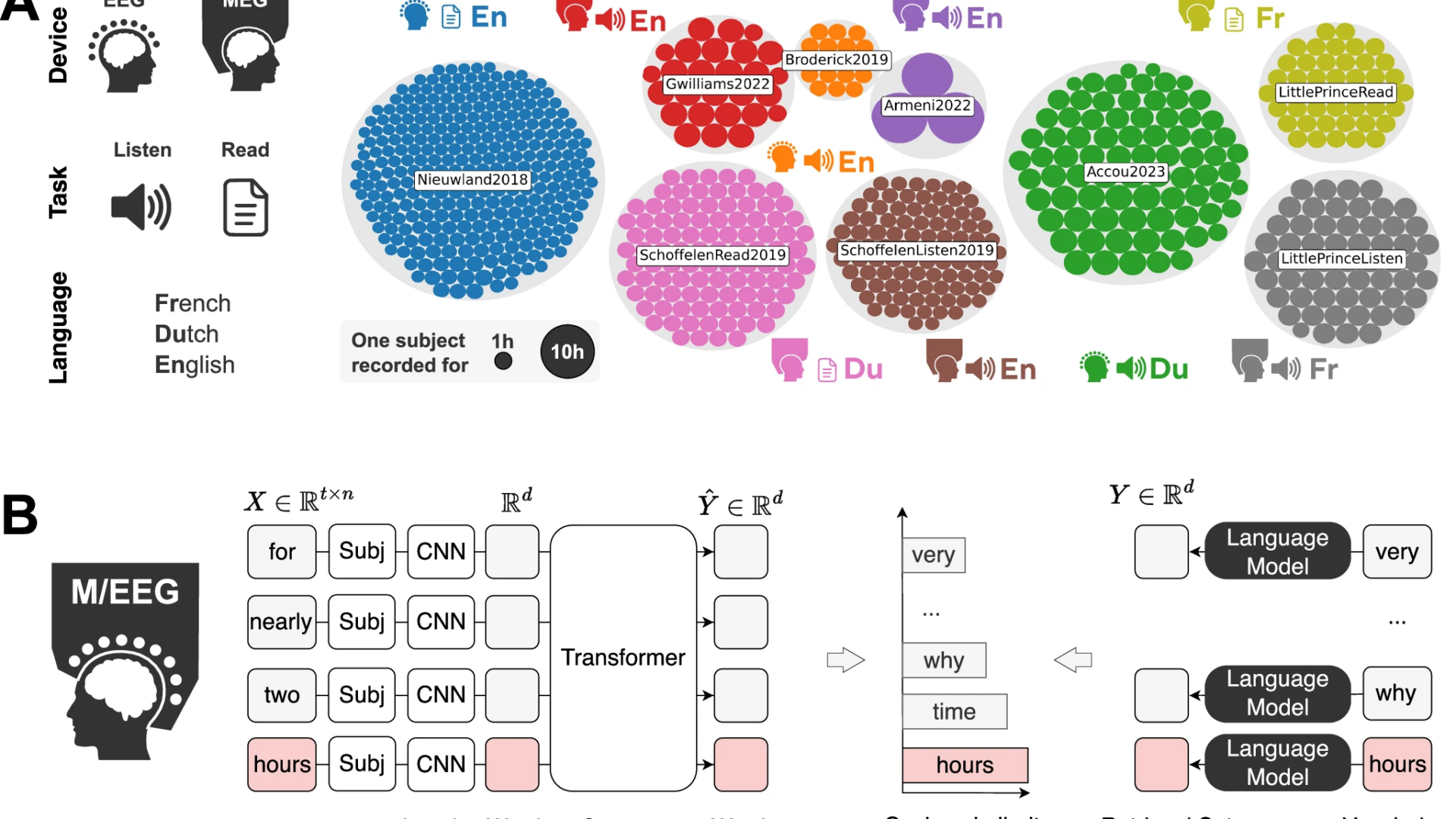

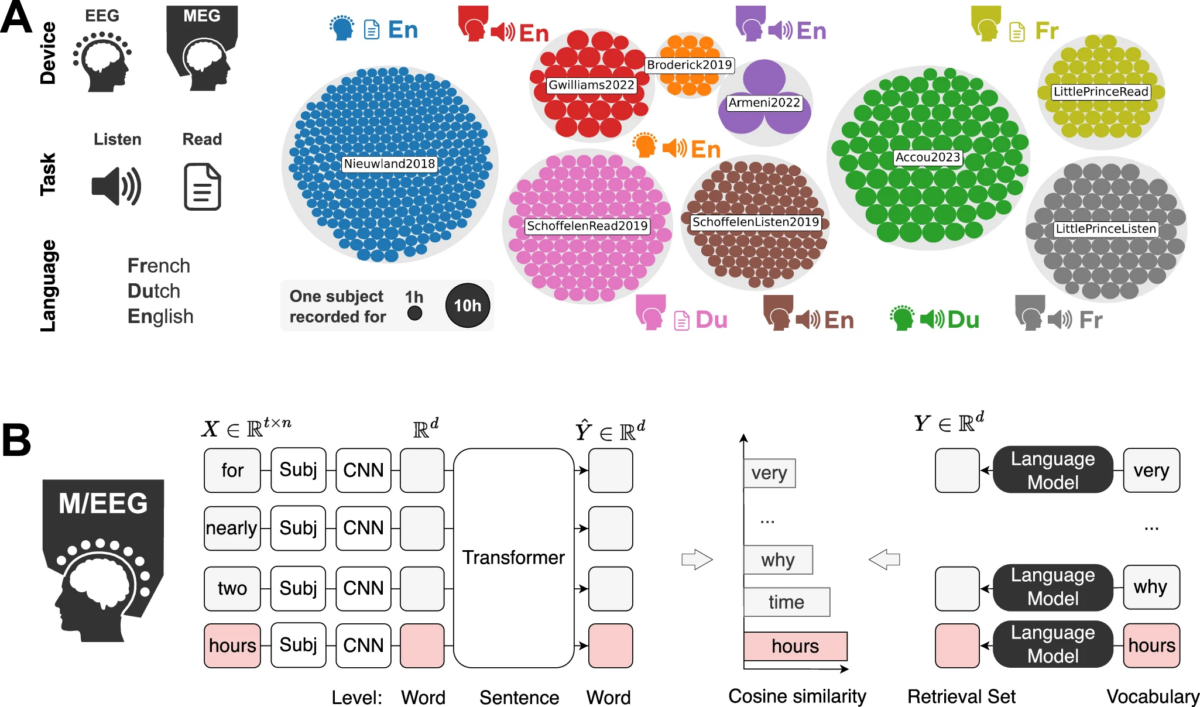

In a recent open-access paper (CC BY 4.0 license), researchers from Meta AI introduced a deep learning pipeline that decodes individual words from electroencephalography (EEG) and magnetoencephalography (MEG) recordings. They evaluated the approach on seven public datasets and two datasets collected by the team, covering a total of 723 participants who read or listened to five million words in three languages. The model consistently outperforms existing methods across participants, recording devices, languages, and tasks, and it can decode words that were absent from the training data. The analyses highlight the importance of both the recording device and the experimental protocol: MEG recordings and reading tasks are easier to decode than EEG recordings and listening tasks. Decoding performance also improves steadily with larger training datasets and with more averaging at test time. Overall, the findings outline both the progress made and the challenges that remain in building non-invasive brain decoders for natural language.
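Why would averaging at test time help? A generic intuition is that non-invasive recordings are very noisy, and averaging several independent noisy recordings of the same word shrinks the noise while preserving the shared signal. The toy simulation below illustrates only that intuition. It is not the authors' pipeline: the per-word "templates", the Gaussian noise model, the nearest-template decoder, and every function name and parameter value are hypothetical assumptions chosen for illustration.

```python
import random
import statistics


def make_templates(n_words, n_features, rng):
    # Hypothetical "clean" response for each word: one random vector per word.
    return [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_words)]


def noisy_trial(template, noise_sd, rng):
    # One simulated recording = clean response + independent Gaussian noise (assumed model).
    return [x + rng.gauss(0.0, noise_sd) for x in template]


def average_trials(trials):
    # Element-wise mean across repeated recordings of the same word.
    return [statistics.fmean(values) for values in zip(*trials)]


def nearest_template(signal, templates):
    # Toy decoder: pick the word whose template has the smallest squared distance.
    def sq_dist(template):
        return sum((s - x) ** 2 for s, x in zip(signal, template))

    return min(range(len(templates)), key=lambda i: sq_dist(templates[i]))


def decoding_accuracy(n_avg, n_words=50, n_features=20, noise_sd=4.0, n_tests=400, seed=0):
    # Fraction of test words decoded correctly when n_avg noisy trials are averaged.
    rng = random.Random(seed)
    templates = make_templates(n_words, n_features, rng)
    correct = 0
    for _ in range(n_tests):
        word = rng.randrange(n_words)
        trials = [noisy_trial(templates[word], noise_sd, rng) for _ in range(n_avg)]
        if nearest_template(average_trials(trials), templates) == word:
            correct += 1
    return correct / n_tests


if __name__ == "__main__":
    # Chance level here is 1 / n_words = 0.02.
    for n in (1, 2, 4, 8, 16):
        print(f"averaged trials: {n:2d}  accuracy: {decoding_accuracy(n):.3f}")
```

In this toy setting, averaging n independent trials divides the noise standard deviation by roughly the square root of n, so accuracy climbs as more trials are averaged. Real EEG/MEG noise is neither Gaussian nor independent across trials, so the actual gains reported in the paper need not follow this simple curve.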

More information: d’Ascoli, S., Bel, C., Rapin, J. et al. Towards decoding individual words from non-invasive brain recordings. Nat Commun 16, 10521 (2025). https://doi.org/10.1038/

Journal information: Nature Communications

Article Source: Nature Communications