Language provides the most revealing window into how humans structure conceptual knowledge within cognitive maps. Harnessing this information has been difficult, given the challenge of reliably mapping words to mental concepts. Large language models (LLMs), a form of artificial intelligence, now offer unprecedented opportunities to revisit this challenge. LLMs represent words and phrases as high-dimensional numerical vectors that encode vast semantic knowledge.

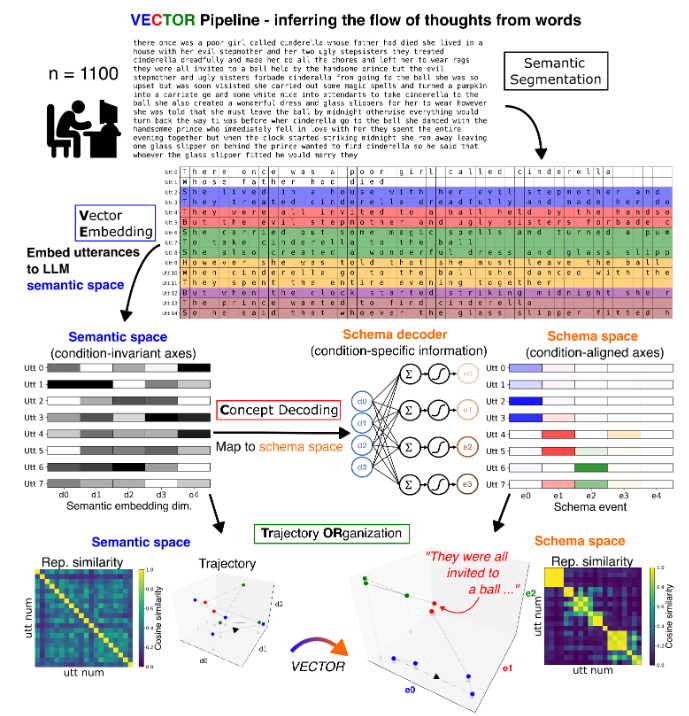

To harness this potential for cognitive science, researchers introduced VECTOR, a computational framework that aligns LLM representations with the organisation of human cognitive maps. VECTOR casts a participant’s verbal reports as a geometric trajectory through a cognitive map representation, revealing how thoughts flow from one idea to the next. Applying VECTOR to narratives generated by 1,100 participants, they showed that these trajectories have cognitively meaningful properties that predict paralinguistic behaviour (response times) and real-world communication patterns. The study suggests that this approach opens new avenues for understanding how humans dynamically organise and navigate conceptual knowledge in naturalistic settings.

IMG SOURCE: Charting trajectories of human thought using large language models.
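The article does not describe VECTOR's internals, so the following is only a minimal sketch of the general idea of a "trajectory through conceptual space", not the authors' method. It assumes each utterance in a narrative has already been turned into a vector by some LLM, then (a) measures how far thought moves between consecutive utterances using cosine distance and (b) labels each utterance with the nearest of a few anchor concepts, standing in for regions of a cognitive map. The anchor names, the toy 3-dimensional vectors and the function names are all hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (norm_a * norm_b)


def step_distances(trajectory: List[Vector]) -> List[float]:
    """Cosine distance between each pair of consecutive utterance embeddings."""
    return [1.0 - cosine_similarity(a, b) for a, b in zip(trajectory, trajectory[1:])]


def nearest_anchor_path(trajectory: List[Vector], anchors: Dict[str, Vector]) -> List[str]:
    """Label each point with its most similar anchor concept (a crude 'map region')."""
    return [
        max(anchors, key=lambda name: cosine_similarity(point, anchors[name]))
        for point in trajectory
    ]


# Hypothetical anchor concepts; real systems would derive these differently.
anchors = {
    "morning": [1.0, 0.0, 0.0],
    "work": [0.0, 1.0, 0.0],
    "evening": [0.0, 0.0, 1.0],
}

# Hypothetical toy vectors standing in for LLM embeddings of four utterances.
utterances = [
    [0.9, 0.1, 0.0],   # e.g. "I woke up and made coffee"
    [0.2, 0.9, 0.1],   # e.g. "then I answered emails"
    [0.1, 0.8, 0.3],   # e.g. "a long meeting ran late"
    [0.0, 0.1, 0.95],  # e.g. "finally I cooked dinner"
]

print(nearest_anchor_path(utterances, anchors))  # ['morning', 'work', 'work', 'evening']
print([round(d, 3) for d in step_distances(utterances)])
```

In this toy narrative the path runs morning, work, work, evening: the second step is small because both utterances sit in the same region, while the first and third steps are larger because they cross between regions. Real LLM embeddings have hundreds to thousands of dimensions, but these functions apply to them unchanged.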

For inner-speech decoding, this kind of framework could offer a powerful new layer of interpretation. Instead of trying to decode isolated words from brain signals, future systems could aim to reconstruct trajectories through conceptual space, using AI-derived semantic maps as a guide. Researchers suggest this could make decoded inner speech more robust and meaningful, bringing brain–computer interfaces closer to capturing the structure and dynamics of thought rather than just individual words.

| More information: Nour, M. M., McNamee, D. C., Fradkin, I., & Dolan, R. J. (2025). Charting trajectories of human thought using large language models. arXiv preprint arXiv:2509.14455. |

| Journal information: arXiv preprint |

| Article Source: arXiv |